User:Maria Schuld/sandbox

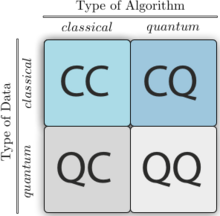

Quantum machine learning is an emerging interdisciplinary research area at the intersection of quantum physics and data mining that summarises efforts to combine methods from quantum information science and machine learning.[1][2][3] Four ways of merging the two parent disciplines can be distinguished. First, quantum machine learning algorithms can use the advantages of quantum computation to improve classical methods of machine learning, for example by developing efficient implementations of expensive classical algorithms on a quantum computer.[4][5][6] Second, classical methods of machine learning can be applied to analyse quantum systems. Third, techniques from quantum physics can be adapted for tasks like deep learning. Lastly, a quantum device can be used to recognise patterns in quantum data.

Quantum-enhanced machine learning

Quantum-enhanced machine learning refers to quantum algorithms that solve tasks in machine learning, thereby improving a classical machine learning method. Such "quantum machine learning algorithms" typically require encoding a 'classical' dataset into a quantum computer, effectively turning it into quantum information. Quantum information processing routines can then be applied, and the result of the quantum computation is read out by measuring the quantum system. For example, the outcome of the measurement of a qubit could reveal the result of a binary classification task. While many proposals of quantum machine learning algorithms are still purely theoretical and require a full-scale universal quantum computer to be tested, others have been implemented on small-scale or special-purpose quantum devices.

Linear algebra simulation with quantum amplitudes

One line of approaches is based on the idea of 'amplitude encoding', that is, associating the amplitudes of a quantum state with the inputs and outputs of computations.[7][8][9][10] Since a state of n qubits is described by 2^n complex amplitudes, this information encoding can allow for an exponentially compact representation. As an intuition, this corresponds to associating a discrete probability distribution over binary random variables with a classical vector. The goal of algorithms based on amplitude encoding is to formulate quantum algorithms whose resources grow polynomially in the number of qubits, which amounts to logarithmic growth in the number of amplitudes and thereby in the dimension of the input.
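The bookkeeping behind amplitude encoding can be illustrated with a short classical sketch. It only computes the amplitude vector that a quantum state would carry; it does not prepare a state on hardware, and the function name `amplitude_encode` and the zero-padding convention are assumptions made for this illustration.

```python
import numpy as np

def amplitude_encode(x):
    """Return (amplitudes, n_qubits) for a feature vector x.

    The vector is zero-padded to the next power of two and rescaled to unit
    Euclidean norm, so that the squared magnitudes sum to one.
    """
    x = np.asarray(x, dtype=complex)
    norm = np.linalg.norm(x)
    if norm == 0:
        raise ValueError("cannot encode the zero vector")
    # Number of qubits grows logarithmically with the input dimension.
    n_qubits = max(1, int(np.ceil(np.log2(len(x)))))
    padded = np.zeros(2 ** n_qubits, dtype=complex)
    padded[: len(x)] = x
    return padded / norm, n_qubits

# A 5-dimensional input needs 3 qubits (8 amplitudes).
amplitudes, n_qubits = amplitude_encode([3.0, 0.0, 4.0, 0.0, 0.0])
probabilities = np.abs(amplitudes) ** 2  # measurement probabilities
```

Doubling the input dimension adds only one qubit, which is the exponential compactness referred to above.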

Many quantum machine learning algorithms in this category are based on variations of the quantum algorithm for linear systems of equations,[11] which under specific conditions performs a matrix inversion with logarithmic dependence on the dimensions of the matrix. One of these conditions is that a Hamiltonian which entrywise corresponds to the matrix can be simulated efficiently, which is known to be possible if the matrix is sparse[12] or low rank.[13] Quantum matrix inversion can be applied to machine learning methods in which the training reduces to solving a linear system of equations, for example in least-squares linear regression,[8][9] the least-squares version of support vector machines,[7] and Gaussian processes.[14]
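To make concrete what "training reduces to solving a linear system" means, the following classical sketch fits a least-squares linear regression through its normal equations. It is a classical reference computation, not the quantum algorithm; the toy data and variable names are hypothetical.

```python
import numpy as np

# Hypothetical noise-free toy data: 50 samples, 3 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w

# Least-squares training amounts to solving the linear system A w = b
# with A = X^T X and b = X^T y (the normal equations).
A = X.T @ X
b = X.T @ y
w = np.linalg.solve(A, b)
```

The matrix `A` plays the role of the matrix to be inverted. The quantum approach targets this step, subject to the conditions on sparsity or rank noted above.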

A crucial bottleneck of methods that simulate linear algebra computations with the amplitudes of quantum states is state preparation, which often requires initialising a quantum system such that its amplitudes reflect the features of the entire dataset. Although methods that are efficient in the number of qubits are known for very specific cases,[15][16] this step easily hides the complexity of the task.[17]

Quantum machine learning algorithms based on Grover search

Another approach to constructing quantum machine learning algorithms uses amplitude amplification methods based on Grover's search algorithm, which has been shown to solve unstructured search problems with a quadratic speedup compared to classical algorithms. These quantum routines can be employed for learning algorithms that translate into an unstructured search task, such as finding the closest datapoints to a new feature vector in the k-nearest neighbors algorithm.[18] Another application is a quadratic speedup in the training of perceptrons.[19]
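The quadratic speedup of Grover search can be seen in a small state-vector simulation. This is a minimal classical sketch with a single marked item; the function name `grover_search` and the problem size are chosen for illustration only.

```python
import numpy as np

def grover_search(n_items, marked):
    """Simulate Grover's algorithm on a vector of n_items real amplitudes."""
    # Start in the uniform superposition over all items.
    state = np.full(n_items, 1 / np.sqrt(n_items))
    # About (pi / 4) * sqrt(N) iterations suffice, versus ~N classical queries.
    n_iterations = int(np.floor(np.pi / 4 * np.sqrt(n_items)))
    for _ in range(n_iterations):
        state[marked] *= -1               # oracle: flip the marked amplitude
        state = 2 * state.mean() - state  # diffusion: inversion about the mean
    return np.abs(state) ** 2, n_iterations

probs, n_iterations = grover_search(n_items=1024, marked=123)
most_likely = int(probs.argmax())
```

For 1024 items the loop runs 25 times, after which a measurement returns the marked index with probability close to one.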

Closely related to amplitude amplification are quantum walks, which offer the same quadratic speedup. Quantum walks have been proposed to enhance Google's PageRank algorithm[20] as well as the reinforcement learning model of projective simulation.[21]

Quantum sampling techniques

Sampling from high-dimensional probability distributions is at the core of a wide spectrum of computational techniques with important applications across science, engineering, and society. Examples include deep learning, probabilistic programming, and other machine learning and artificial intelligence applications.

A computationally hard problem that is key to some relevant machine learning tasks is the estimation of averages over probabilistic models defined in terms of a Boltzmann distribution.

Sampling from generic probabilistic models, such as a Boltzmann distribution, is hard. For this reason, algorithms relying heavily on sampling are expected to remain intractable no matter how large and powerful classical computing resources become. Even though quantum annealers, like those produced by D-Wave Systems, were designed for challenging combinatorial optimization problems, they have recently been recognized as potential candidates to speed up computations that rely on sampling by exploiting quantum effects.[22]

Some research groups have recently explored the use of quantum annealing hardware for the learning of Boltzmann machines and deep neural networks.[23][24][25][26] The standard approach to the learning of Boltzmann machines relies on the computation of certain averages that can be estimated by standard sampling techniques, such as Markov chain Monte Carlo algorithms. Another possibility is to rely on a physical process, like quantum annealing, that naturally generates samples from a Boltzmann distribution. In contrast to their use for optimization, when applying quantum annealing hardware to the learning of Boltzmann machines, the control parameters (instead of the qubits’ states) are the relevant variables of the problem. The objective is to find the optimal control parameters that best represent the empirical distribution of a given dataset.
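The role of the averages in Boltzmann machine learning can be sketched classically for a model small enough to enumerate exactly. The sketch assumes a fully visible model with spins in {-1, +1}, unit temperature, and a hypothetical toy dataset. The exact enumeration in `boltzmann_distribution` is the step that a sampler, such as Markov chain Monte Carlo or an annealer, would approximate for larger models.

```python
import itertools
import numpy as np

def boltzmann_distribution(W, b):
    """Exact Boltzmann distribution for E(s) = -sum_{i<j} W_ij s_i s_j - sum_i b_i s_i.

    W is symmetric with zero diagonal; the temperature is assumed to be 1.
    """
    states = np.array(list(itertools.product([-1, 1], repeat=len(b))))
    energies = -0.5 * np.einsum("si,ij,sj->s", states, W, states) - states @ b
    weights = np.exp(-energies)
    return states, weights / weights.sum()

def averages(states, p):
    """Return <s_i s_j> and <s_i> under the distribution p over states."""
    pair = np.einsum("s,si,sj->ij", p, states, states)
    single = p @ states
    return pair, single

# Hypothetical toy dataset: each of the 8 states of 3 spins with a count.
all_states = np.array(list(itertools.product([-1, 1], repeat=3)))
counts = np.array([3, 1, 2, 1, 1, 2, 1, 5])
data_pair, data_single = averages(all_states, counts / counts.sum())

# Gradient ascent on the log-likelihood: data averages minus model averages.
W = np.zeros((3, 3))
b = np.zeros(3)
learning_rate = 0.1
for _ in range(3000):
    states, p = boltzmann_distribution(W, b)
    model_pair, model_single = averages(states, p)
    grad_W = data_pair - model_pair
    np.fill_diagonal(grad_W, 0.0)  # no self-couplings
    W += learning_rate * grad_W
    b += learning_rate * (data_single - model_single)

states, p = boltzmann_distribution(W, b)
model_pair, model_single = averages(states, p)
```

The learned variables are the couplings `W` and biases `b`, which correspond to the control parameters mentioned above rather than to the spin states themselves.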

The D-Wave 2X system hosted at NASA Ames Research Center has recently been used for the learning of a special class of restricted Boltzmann machines that can serve as a building block for deep learning architectures.[25] Complementary work that appeared roughly simultaneously showed that quantum annealing can be used for supervised learning in classification tasks.[23] The same device was later used to train a fully connected Boltzmann machine to generate, reconstruct, and classify images that closely resemble (low resolution) handwritten digits, among other synthetic datasets.[24] In both cases, the models trained by the D-Wave 2X displayed a similar or better performance in terms of quality (i.e. in terms of the values of likelihood reached).

The ultimate question that drives this endeavor is whether there is quantum speedup in sampling applications. Experience with the use of quantum annealers for combinatorial optimization suggests the answer is not straightforward.

Inspired by the success of Boltzmann machines based on the classical Boltzmann distribution, a new machine learning approach based on the quantum Boltzmann distribution of a transverse-field Ising Hamiltonian was recently proposed.[27] Due to the non-commutative nature of quantum mechanics, the training process of the quantum Boltzmann machine (QBM) can become nontrivial. This problem was to some extent circumvented by introducing bounds on the quantum probabilities, which allowed the authors to train the QBM efficiently by sampling. It is possible that a specific type of quantum Boltzmann machine has been trained in the D-Wave 2X by using a learning rule analogous to that of classical Boltzmann machines.[24][26]

In a related proposal, quantum computing was used not only to reduce the time required to train a deep restricted Boltzmann machine, but also to provide a richer and more comprehensive framework for deep learning than classical computing.[28] The same quantum methods also permit efficient training of full Boltzmann machines and multi-layer, fully connected models, and do not have well-known classical counterparts.

Relying on an efficient thermal state preparation protocol starting from an arbitrary state, quantum-enhanced Markov logic networks exploit the symmetries and the locality structure of the probabilistic graphical model generated by a first-order logic template.[29] This provides an exponential reduction in computational complexity in probabilistic inference, and, while the protocol relies on a universal quantum computer, under mild assumptions it can be embedded on contemporary quantum annealing hardware.

Learning about quantum systems

The term quantum machine learning can also be used for approaches that apply classical methods of machine learning to problems of quantum information theory. For example, when experimentalists have to deal with incomplete information on a quantum system or source, Bayesian methods and concepts of algorithmic learning can be fruitfully applied. This includes machine learning approaches to quantum state classification,[30] Hamiltonian learning,[31] and learning an unknown unitary transformation.[32][33]

Classical machine learning algorithms to learn about quantum systems

A variety of classical machine learning algorithms have been proposed to tackle problems in quantum information and technologies. This becomes particularly relevant with the increasing ability to experimentally control and prepare larger quantum systems.

In this context, many machine learning techniques can be used to more efficiently address experimentally relevant problems. Indeed, the problem of turning huge and noisy data sets into meaningful information is by no means unique to the quantum laboratory. This results in many machine learning techniques being naturally adapted to tackle these problems. Notable examples are those of extracting information on a given quantum state,[34][35][36] or on a given quantum process.[37][38][39][40][41] A partial list of problems that have been addressed with machine learning techniques includes:

- The problem of identifying an accurate model for the dynamics of a quantum system, through the reconstruction of its Hamiltonian.[38][39][40]

- Extracting information from unknown states.[34][42][43][44]

- Learning unknown unitaries and measurements.[37]

- Engineering of quantum gates from qubit networks with pairwise interactions, using time-dependent[45] or time-independent[41] Hamiltonians.

- Generation of new quantum experiments by finding the combination of quantum operations that allows for the generation of a quantum state with a certain desired property.[46]

Quantum reinforcement learning

Within the field of quantum machine learning, a topic that has attracted growing interest in the past few years is quantum reinforcement learning.[47][48][49][50][51] The aim of this line of research is to analyze the behaviour of quantum agents interacting with a certain environment, which may be either classical or quantum. Depending on the chosen interaction between the quantum agent and the environment, the agent receives a different reward, which allows it, after some iterations, to arrive at a policy for succeeding in its goal. A tradeoff must be struck between exploration and exploitation. In some situations, either because of the quantum processing capability of the agent or because of the possibility of probing the environment in superpositions, a quantum speedup may be achieved. Preliminary implementations of this kind of protocol in superconducting circuits have been proposed.

Quantum learning theory

While quantum computers can outperform classical ones in some machine learning tasks, a full characterization of statistical learning theory with quantum resources is still under development.[52] The sample complexities of broad classes of quantum and classical machine learning algorithms are polynomially equivalent,[53] but their computational complexities need not be. The ability of quantum computers to search for the most informative samples can polynomially improve the expected number of samples necessary to learn a concept.[54]

Classically, learning an inductive model splits into a training phase and an application phase. The model parameters are estimated in the training phase, and the learned model is applied arbitrarily many times in the application phase. In the asymptotic limit of the number of applications, this splitting of phases is also present with quantum resources.[55] This clear splitting makes it possible to talk about bounds on generalization performance in the quantum case, which, in turn, makes it relevant to analyse model complexity.[56]

Implementations and experiments

Li et al. (2015)[57] reported the first experimental implementation of a quantum support vector machine, distinguishing the handwritten digits ‘6’ and ‘9’ on a liquid-state nuclear magnetic resonance (NMR) quantum computer. The training data were obtained by pre-processing the images and mapping them to normalized 2-dimensional vectors, which allows each image to be represented as the state of a qubit. The two entries of the vector are the vertical and horizontal ratios of the pixel intensity of the image. Once the vectors are defined in the feature space, the quantum support vector machine is used to classify the unknown input vector. The readout avoids quantum tomography by reading out the final state in terms of the direction (up/down) of the NMR signal. Neigovzen et al. (2009)[58] devised and implemented a quantum pattern recognition algorithm on NMR. They implemented a quantum Hopfield network in which the input data and the memorized data are mapped to Hamiltonians, which makes it possible to use adiabatic quantum computation.

Cai et al. (2015)[59] were the first to experimentally demonstrate quantum machine learning on a photonic quantum computer and showed that the distance between two vectors and their inner product can indeed be computed quantum mechanically. Brunner et al. (2013)[60] used photonics to demonstrate simultaneous spoken digit and speaker recognition and chaotic time-series prediction at data rates beyond 1 gigabyte per second. Furthermore, Tezak & Mabuchi (2015)[61] proposed using non-linear photonics to implement an all-optical linear classifier capable of learning the classification boundary iteratively from training data through a feedback rule.
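One standard way of estimating the overlap of two amplitude-encoded vectors on a quantum computer is the swap test. The sketch below simulates it classically. It is offered only as an illustration of how an inner product can be read out from a measurement probability and is not claimed to be the procedure used in the experiments cited above; the function name `swap_test_p0` is hypothetical.

```python
import numpy as np

def swap_test_p0(a, b):
    """Probability of measuring the ancilla in |0> after a swap test on |a>, |b>."""
    a = np.asarray(a, dtype=complex)
    b = np.asarray(b, dtype=complex)
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    # After Hadamard, controlled-swap, Hadamard on the ancilla, the ancilla-0
    # branch of the state is (|a>|b> + |b>|a>) / 2.
    ab = np.kron(a, b)
    ba = np.kron(b, a)
    ancilla0_branch = (ab + ba) / 2
    return float(np.vdot(ancilla0_branch, ancilla0_branch).real)

# p0 = (1 + |<a|b>|^2) / 2, so the squared overlap follows from p0.
p0 = swap_test_p0([1.0, 0.0], [1.0, 1.0])
overlap_squared = 2 * p0 - 1
```

On hardware, `p0` would be estimated from repeated measurements of the ancilla, so the precision of the overlap depends on the number of repetitions.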

In an early experiment, Neven et al. (2009)[62] used the adiabatic D-Wave quantum computer to detect cars in digital images using regularized boosting with a nonconvex objective function. Benedetti et al. (2016)[63] trained probabilistic generative models with arbitrary pairwise connectivity on the D-Wave quantum computer. They showed that their model is capable of generating handwritten digits as well as reconstructing noisy images of bars and stripes and of handwritten digits.

In recent years, leading tech companies have also shown interest in the potential of quantum machine learning for future technological implementations. In 2013, Google Research, NASA and the Universities Space Research Association launched the Quantum Artificial Intelligence Lab, which explores the use of the adiabatic D-Wave quantum computer.[64][65] Researchers at Microsoft Research conduct theoretical research in the field of quantum machine learning, but no experimental implementations are known to date.[66]

A novel ingredient has recently been added to the field of quantum machine learning in the form of the so-called quantum memristor, a quantized model of the standard classical memristor.[67] This kind of device incorporates a superconducting quantum bit together with the possibility of performing a weak measurement on the system and applying a feed-forward mechanism. An implementation of a quantum memristor in superconducting circuits has also been proposed.[68] A quantum memristor would implement nonlinear interactions in the quantum dynamics, which would aid the search for a fully functional quantum neural network.

References

- ^ Maria Schuld, Ilya Sinayskiy, and Francesco Petruccione (2014) An introduction to quantum machine learning, Contemporary Physics, doi:10.1080/00107514.2014.964942 (preprint available at arXiv:1409.3097)

- ^ Wittek, Peter (2014). Quantum Machine Learning: What Quantum Computing Means to Data Mining. Academic Press. ISBN 978-0-12-800953-6.

- ^ Jeremy Adcock, Euan Allen, Matt Day, Stefan Frick, Janna Hinchliff, Mack Johnson, Sam Morley-Short, Sam Pallister, Alasdair Price and Stasja Stanisic (2015) Advances in Quantum Machine Learning, arXiv:1512.02900

- ^ see for example, Nathan Wiebe, Ashish Kapoor, and Krysta M. Svore (2014) Quantum Algorithms for Nearest-Neighbor Methods for Supervised and Unsupervised Learning, arXiv:1401.2142v2

- ^ Seth Lloyd, Masoud Mohseni, and Patrick Rebentrost (2014) Quantum algorithms for supervised and unsupervised machine learning, arXiv:1307.0411v2

- ^ Seokwon Yoo, Jeongho Bang, Changhyoup Lee, and Jinhyoung Lee, A quantum speedup in machine learning: finding an N-bit Boolean function for a classification, New Journal of Physics 16 (2014) 103014, arXiv:1303.6055

- ^ a b Patrick Rebentrost, Masoud Mohseni, and Seth Lloyd (2014) Quantum support vector machine for big data classification, Physical Review Letters 113 130501

- ^ a b Nathan Wiebe, Daniel Braun, and Seth Lloyd (2012) Quantum algorithm for data fitting. Physical Review Letters, 109(5):050505.

- ^ a b Maria Schuld, Ilya Sinayskiy, Francesco Petruccione (2016) Prediction by linear regression on a quantum computer, Physical Review A, 94, 2, 022342

- ^ Zhikuan Zhao, Jack K Fitzsimons, and Joseph F Fitzsimons (2015) Quantum assisted Gaussian process regression. arXiv:1512.03929

- ^ Aram W. Harrow, Avinatan Hassidim and Seth Lloyd (2009) Quantum Algorithm for Linear Systems of Equations, Physical Review Letters 103 150502, arXiv:0811.3171

- ^ Dominic W Berry, Andrew M Childs, and Robin Kothari (2015) Hamiltonian simulation with nearly optimal dependence on all parameters. In Foundations of Computer Science (FOCS), 2015 IEEE 56th Annual Symposium on, pages 792–809. IEEE

- ^ Seth Lloyd, Masoud Mohseni, and Patrick Rebentrost (2014) Quantum principal component analysis. Nature Physics, 10:631–633

- ^ Zhikuan Zhao, Jack K Fitzsimons, and Joseph F Fitzsimons (2015) Quantum assisted Gaussian process regression. arXiv:1512.03929

- ^ Andrei N Soklakov and Rüdiger Schack. Efficient state preparation for a register of quantum bits. Physical Review A, 73(1):012307, 2006.

- ^ Vittorio Giovannetti, Seth Lloyd, and Lorenzo Maccone. Quantum random access memory. Physical Review Letters, 100(16):160501, 2008.

- ^ Scott Aaronson. Read the fine print. Nature Physics, 11(4):291–293, 2015.

- ^ see for example, Nathan Wiebe, Ashish Kapoor, and Krysta M. Svore (2014) Quantum Algorithms for Nearest-Neighbor Methods for Supervised and Unsupervised Learning, arXiv:1401.2142v2

- ^ Ashish Kapoor, Nathan Wiebe, and Krysta Svore. Quantum perceptron models. In Advances In Neural Information Processing Systems, pages 3999–4007, 2016.

- ^ Giuseppe Davide Paparo and MA Martin-Delgado. Google in a quantum network. Scientific Reports, 2, 2012.

- ^ Giuseppe Davide Paparo, Vedran Dunjko, Adi Makmal, Miguel Angel Martin-Delgado, and Hans J Briegel. Quantum speedup for active learning agents. Physical Review X, 4(3):031002, 2014.

- ^ Biswas, Rupak; Jiang, Zhang; Kechezhi, Kostya; Knysh, Sergey; Mandrà, Salvatore; O’Gorman, Bryan; Perdomo-Ortiz, Alejandro; Petukhov, Andre; Realpe-Gómez, John; Rieffel, Eleanor (2016). "A NASA perspective on quantum computing: Opportunities and challenges". Parallel Computing.

- ^ a b Adachi, Steven H.; Henderson, Maxwell P. (2015). "Application of quantum annealing to training of deep neural networks". arXiv:1510.06356.

- ^ a b c Benedetti, Marcello; Realpe-Gómez, John; Biswas, Rupak; Perdomo-Ortiz, Alejandro (2016). "Quantum-assisted learning of graphical models with arbitrary pairwise connectivity". arXiv:1609.02542.

- ^ a b Benedetti, Marcello; Realpe-Gómez, John; Biswas, Rupak; Perdomo-Ortiz, Alejandro (2016). "Estimation of effective temperatures in quantum annealers for sampling applications: A case study with possible applications in deep learning". Physical Review A. 94 (2): 022308. doi:10.1103/PhysRevA.94.022308.

- ^ a b Korenkevych, Dmytro; Xue, Yanbo; Bian, Zhengbing; Chudak, Fabian; Macready, William G.; Rolfe, Jason; Andriyash, Evgeny (2016). "Benchmarking quantum hardware for training of fully visible Boltzmann machines". arXiv:1611.04528.

- ^ Amin, Mohammad H.; Andriyash, Evgeny; Rolfe, Jason; Kulchytskyy, Bohdan; Melko, Roger (2016). "Quantum Boltzmann machines". arXiv:1601.02036.

- ^ Wiebe, Nathan; Kapoor, Ashish; Svore, Krysta M. (2014). "Quantum deep learning". arXiv:1412.3489.

- ^ Wittek, Peter; Gogolin, Christian (2016). "Quantum Enhanced Inference in Markov Logic Networks". arXiv:1611.08104.

- ^ Sentís, G.; Calsamiglia, J.; Muñoz-Tapia, R.; Bagan, E. (2012). "Quantum learning without quantum memory". Scientific Reports. 2: 708. arXiv:1106.2742. Bibcode:2012NatSR...2E.708S. doi:10.1038/srep00708.

- ^ Wiebe, Nathan; Granade, Christopher; Ferrie, Christopher; Cory, David (2014). "Quantum Hamiltonian learning using imperfect quantum resources". Physical Review A. 89: 042314. arXiv:1311.5269. Bibcode:2014PhRvA..89d2314W. doi:10.1103/physreva.89.042314.

- ^ Alessandro Bisio, Giulio Chiribella, Giacomo Mauro D’Ariano, Stefano Facchini, and Paolo Perinotti (2010) Optimal quantum learning of a unitary transformation, Physical Review A 81, 032324, arXiv:0903.0543

- ^ Bang, Jeongho; Ryu, Junghee; Yoo, Seokwon; Pawłowski, Marcin; Lee, Jinhyoung (2014). "A strategy for quantum algorithm design assisted by machine learning". New Journal of Physics. 16: 073017. arXiv:1304.2169. Bibcode:2014NJPh...16a3017K. doi:10.1088/1367-2630/16/1/013017.

- ^ a b Sasaki, M.; Carlini, A.; Jozsa, R. (2001-07-17). "Quantum Template Matching". Physical Review A. 64 (2). doi:10.1103/PhysRevA.64.022317. ISSN 1050-2947.

- ^ Sasaki, Masahide (2002-01-01). "Quantum learning and universal quantum matching machine". Physical Review A. 66 (2). doi:10.1103/PhysRevA.66.022303.

- ^ Sentís, G.; Calsamiglia, J.; Munoz-Tapia, R.; Bagan, E. (2011-06-14). "Quantum learning without quantum memory". arXiv:1106.2742 [quant-ph].

- ^ a b Bisio, A.; Chiribella, G.; D'Ariano, G. M.; Facchini, S.; Perinotti, P. (2010-03-25). "Optimal quantum learning of a unitary transformation". Physical Review A. 81 (3). doi:10.1103/PhysRevA.81.032324. ISSN 1050-2947.

- ^ a b Granade, Christopher E.; Ferrie, Christopher; Wiebe, Nathan; Cory, D. G. (2012-10-03). "Robust Online Hamiltonian Learning". New Journal of Physics. 14 (10): 103013. doi:10.1088/1367-2630/14/10/103013. ISSN 1367-2630.

- ^ a b Wiebe, Nathan; Granade, Christopher; Ferrie, Christopher; Cory, D. G. (2014-05-14). "Hamiltonian Learning and Certification Using Quantum Resources". Physical Review Letters. 112 (19). doi:10.1103/PhysRevLett.112.190501. ISSN 0031-9007.

- ^ a b Wiebe, Nathan; Granade, Christopher; Ferrie, Christopher; Cory, David G. (2014-04-17). "Quantum Hamiltonian Learning Using Imperfect Quantum Resources". Physical Review A. 89 (4). doi:10.1103/PhysRevA.89.042314. ISSN 1050-2947.

- ^ a b Banchi, Leonardo; Pancotti, Nicola; Bose, Sougato (2016-07-19). "Quantum gate learning in qubit networks: Toffoli gate without time-dependent control". npj Quantum Information. 2. doi:10.1038/npjqi.2016.19. ISSN 2056-6387.

- ^ Sasaki, Masahide (2002-01-01). "Quantum learning and universal quantum matching machine". Physical Review A. 66 (2). doi:10.1103/PhysRevA.66.022303.

- ^ Sentís, Gael; Guţă, Mădălin; Adesso, Gerardo (2015-07-09). "Quantum learning of coherent states". EPJ Quantum Technology. 2 (1): 17. doi:10.1140/epjqt/s40507-015-0030-4. ISSN 2196-0763.

- ^ Sentís, G.; Calsamiglia, J.; Muñoz-Tapia, R.; Bagan, E. (2012-10-05). "Quantum learning without quantum memory". Scientific Reports. 2. doi:10.1038/srep00708. ISSN 2045-2322. PMC 3464493. PMID 23050092.

- ^ Zahedinejad, Ehsan; Ghosh, Joydip; Sanders, Barry C. (2016-11-16). "Designing High-Fidelity Single-Shot Three-Qubit Gates: A Machine Learning Approach". Physical Review Applied. 6 (5). doi:10.1103/PhysRevApplied.6.054005. ISSN 2331-7019.

- ^ Krenn, Mario (2016-01-01). "Automated Search for new Quantum Experiments". Physical Review Letters. 116 (9). doi:10.1103/PhysRevLett.116.090405.

- ^ Daoyi Dong, Chunlin Chen, Hanxiong Li, Tzih-Jong Tarn, Quantum reinforcement learning. doi:10.1109/TSMCB.2008.925743, IEEE Trans. Syst. Man Cybern. B Cybern. 38, 1207-1220 (2008).

- ^ Giuseppe Davide Paparo, Vedran Dunjko, Adi Makmal, Miguel Angel Martin-Delgado, and Hans J. Briegel, doi:10.1103/PhysRevX.4.031002 Phys. Rev. X 4, 031002 (2014).

- ^ Vedran Dunjko, Jacob M. Taylor, and Hans J. Briegel, doi:10.1103/PhysRevLett.117.130501 Phys. Rev. Lett. 117, 130501 (2016).

- ^ D. Crawford, A. Levit, N. Ghadermarzy, J. S. Oberoi, and P. Ronagh, Reinforcement Learning Using Quantum Boltzmann Machines. arXiv:1612.05695

- ^ Lucas Lamata, Basic protocols in quantum reinforcement learning with superconducting circuits, arXiv:1701.05131

- ^ Arunachalam, Srinivasan; de Wolf, Ronald (2017). "A Survey of Quantum Learning Theory". arXiv:1701.06806.

- ^ Arunachalam, Srinivasan; de Wolf, Ronald (2016). "Optimal Quantum Sample Complexity of Learning Algorithms". arXiv:1607.00932.

- ^ Wiebe, Nathan; Kapoor, Ashish; Svore, Krysta (2016). "Quantum Perceptron Models". Advances in Neural Information Processing Systems. 29: 3999–4007.

- ^ Monràs, Alex; Sentís, Gael; Wittek, Peter (2016). "Inductive quantum learning: Why you are doing it almost right". arXiv:1605.07541.

- ^ Atıcı, Alp; Servedio, Rocco A. (2005). "Improved Bounds on Quantum Learning Algorithms". Quantum Information Processing. 4 (5): 355–386. doi:10.1109/CCC.2001.933881.

- ^ Zhaokai Li, Xiaomei Liu, Nanyang Xu, and Jiangfeng Du (2015), Experimental Realization of a Quantum Support Vector Machine, doi:10.1103/PhysRevLett.114.140504

- ^ Rodion Neigovzen, Jorge L. Neves, Rudolf Sollacher, and Steffen J. Glaser (2009), Quantum pattern recognition with liquid-state nuclear magnetic resonance doi:10.1103/PhysRevA.79.042321

- ^ X.-D. Cai, D. Wu, Z.-E. Su, M.-C. Chen, X.-L. Wang, Li Li, N.-L. Liu, C.-Y. Lu, and J.-W. Pan. Entanglement-Based Machine Learning on a Quantum Computer. Phys. Rev. Lett. 114, 110504, 20 March 2015. doi:10.1103/PhysRevLett.114.110504

- ^ Daniel Brunner, Miguel C. Soriano, Claudio R. Mirasso and Ingo Fischer. Parallel photonic information processing at gigabyte per second data rates using transient states. Nature Communications, 4:1364, January 2013. doi:10.1038/ncomms2368

- ^ Nikolas Tezak and Hideo Mabuchi. A coherent perceptron for all-optical learning. EPJ Quantum Technology 2:10, 30 April 2015. doi:10.1140/epjqt/s40507-015-0023-3

- ^ "NIPS 2009 Demonstration: Binary Classification using Hardware Implementation of Quantum Annealing" (PDF). Static.googleusercontent.com. Retrieved 26 November 2014.

- ^ Marcello Benedetti, John Realpe-Gómez, Rupak Biswas, and Alejandro Perdomo-Ortiz, Quantum-assisted learning of graphical models with arbitrary pairwise connectivity, arXiv:1609.02542

- ^ "Google Quantum A.I. Lab Team". Google Plus. Google. 31 January 2017. Retrieved 31 January 2017.

- ^ "NASA Quantum Artificial Intelligence Laboratory". NASA. NASA. 31 January 2017. Retrieved 31 January 2017.

- ^ Wiebe, Nathan; Kapoor, Ashish; Svore, Krysta (1 March 2015). "Quantum Nearest-neighbor Algorithms for Machine Learning". Microsoft Research. Microsoft Research. Retrieved 31 January 2017.

- ^ P. Pfeiffer, I. L. Egusquiza, M. Di Ventra, M. Sanz, and E. Solano, Quantum Memristors, Scientific Reports 6, 29507 (2016) doi:10.1038/srep29507

- ^ J. Salmilehto, F. Deppe, M. Di Ventra, M. Sanz, and E. Solano, Quantum Memristors with Superconducting Circuits, arXiv:1603.04487